How to fix "Indexed, though blocked by robots.txt"

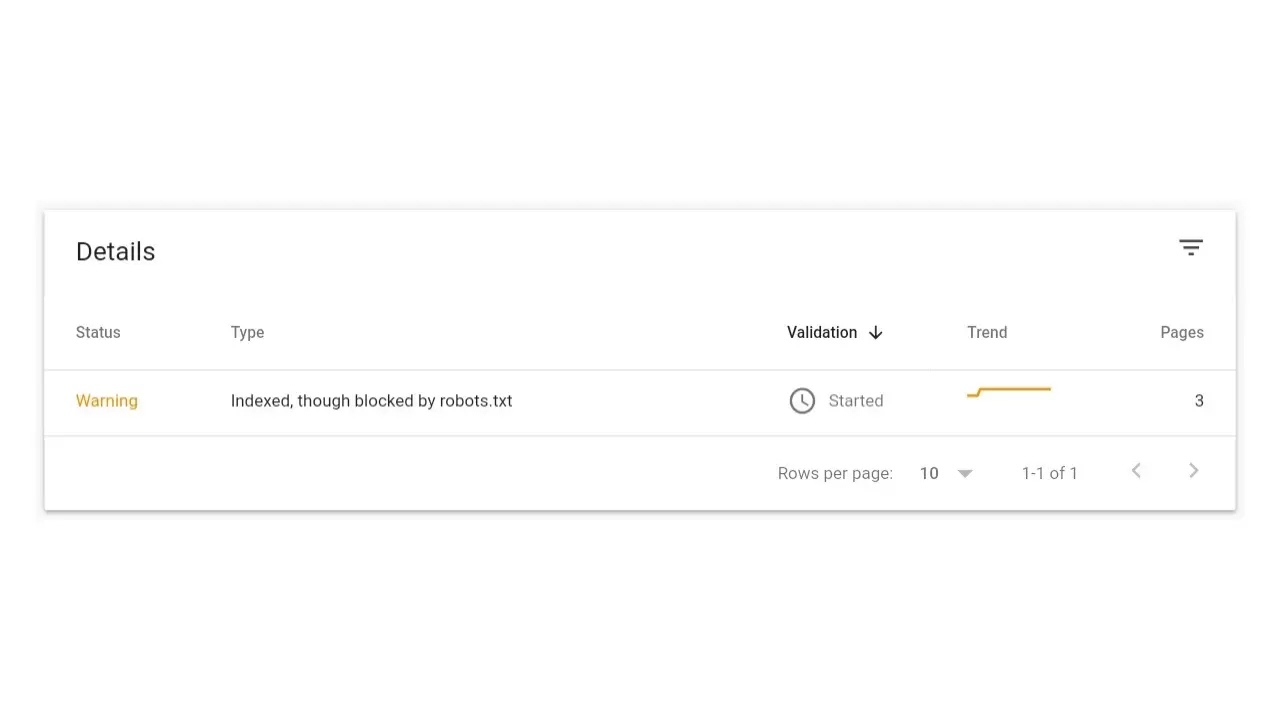

The warning "Indexed, though blocked by robots.txt" usually appears in the new version of Google Search Console, especially for blogs on the Blogger platform.

If you check the URLs that get this message, they are all search pages: label pages (/search/label/...) and the older-posts navigation pages. Most of the reported cases are search pages.

Why is it indexed even though it is blocked by robots.txt?

When you get the "Indexed, though blocked by robots.txt" message in Google Search Console, you are likely to panic at the words "blocked" and "negative impact" in the message. It looks like a big deal.

You should know that this message in Google Search Console is only a warning, or just a notification. It is not something that always has to be fixed. That is why the warning reads, "We encourage you to review and consider fixing this issue." So it needs to be reviewed and considered. If, after reviewing it, you conclude that it is not a problem, you do not need to act on it.

For this case, there are 2 types of scenarios that cause it. Among them are:

1. If using the default robots.txt

Check the robots.txt that your blog uses by opening a link like the example below:

https://Phamdom.blogspot.com/robots.txt

The robots.txt that appears will usually look like the one below when it is still set to [default]:

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://Phamdom.blogspot.com/sitemap.xml

The robots.txt above clearly tells bots not to crawl any search pages. So why are these pages still found by robots? Because search pages are linked from other pages, through breadcrumbs, label widgets, and next/previous page navigation.

For example, such a URL can show up in Google search results while Search Console reports it as indexed but blocked by robots.txt.

The Disallow: directive prohibits bots and search engines from crawling the page or directory that follows it. In the file above, Disallow: /search prohibits search engines from crawling any URL whose path begins with /search. Example URLs:

https://example.com/search?q=phone+distribution

https://example.com/search/label/write?updated-max=2021-07-20T22:31:09-10:37&max-results=100&start=20&by-date=false

Why does Blogger's default robots.txt deliberately block these pages? Because they do not need to be crawled or indexed. Search pages are effectively unlimited in number, so crawling them would use up crawl quota that should go to post pages first.
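As a rough illustration of how the default rules apply to these URLs, here is a minimal Python sketch using the standard library's urllib.robotparser. The domain example.com, the post URL, and the helper names build_parser and is_crawlable are placeholders chosen for this example. Python's parser applies the first matching rule while Google uses the most specific one, but for this simple file both approaches should give the same answers.

```python
from urllib.robotparser import RobotFileParser

# Default Blogger-style rules; example.com is a placeholder domain.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making any network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_crawlable(parser: RobotFileParser, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the rules allow `agent` to crawl `url`."""
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    for url in (
        "https://example.com/search?q=phone+distribution",
        "https://example.com/search/label/write",
        "https://example.com/2021/07/some-post.html",  # hypothetical post URL
    ):
        print(url, "crawlable" if is_crawlable(parser, url) else "blocked")
```

Note that this only answers the crawling question. It says nothing about whether a blocked URL can still end up in the index.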

The story is different if the search URL is linked from another website. Google can then discover the URL through that link and index it, showing it in search results, even though it is not allowed to crawl the page.

Remember, crawling and indexing are two different things. robots.txt only controls crawling.

This is the root of the "Indexed, though blocked by robots.txt" case. It most likely happens because you use the default robots.txt and a link to one of your search pages was found on someone else's blog (for example, through backlink building).

Do you need to worry about /search URLs being indexed? No. This has no effect on the site's overall performance in search results, and a /search URL rarely ranks higher than a post page.

In conclusion, if you use the default robots.txt, the message in Search Console is not a problem and can be ignored.

2. If you enabled custom robots.txt

If you have changed your robots.txt settings or the custom robots header tags, you will need to check the URLs listed in Search Console one by one.

For each URL, do you want it to be:

(1) not crawled but still indexable, or

(2) not crawled and not indexed?

If the answer is option (1), everything is fine. The notification in Search Console can be ignored because the behavior is intentional.

If the answer is option (2), meaning you do not want the URL to be indexed or to appear in search results, do not use a robots.txt rule for it. Use the 'noindex' meta tag or the noindex directive in the X-Robots-Tag HTTP header. Alternatively, protect the directory or page with a password. In addition, robots.txt must allow crawling of that URL; otherwise Google cannot read the 'noindex' tag. With that in place, the problem is solved.
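To check whether a page actually carries a noindex signal, something like the following Python sketch could be used. It relies only on the standard library's html.parser. The class RobotsMetaParser and the function has_noindex are names made up for this example, and the sketch assumes you already have the page's HTML and response headers at hand.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name='robots'> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html: str, headers: dict = None) -> bool:
    """Return True if the meta tag or the X-Robots-Tag header says noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # Header names are case-insensitive, so normalize them before the lookup.
    normalized = {key.lower(): value for key, value in (headers or {}).items()}
    header_directives = {
        part.strip().lower()
        for part in normalized.get("x-robots-tag", "").split(",")
        if part.strip()
    }
    return "noindex" in parser.directives or "noindex" in header_directives


if __name__ == "__main__":
    sample = "<head><meta content='noindex,noarchive' name='robots'/></head>"
    print(has_noindex(sample))
```

Keep in mind that a crawler only sees these signals if robots.txt lets it fetch the page in the first place.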

To learn more about resolving this warning, continue below.

How to Troubleshoot Indexed, though Blocked by Robots.txt

After reading the explanation above, some of you may no longer be concerned about "Indexed, though blocked by robots.txt" in Google Search Console. But if you still want to clear the warning, follow the tutorial below.

If you are using the robots.txt shown earlier, you will need to replace it. Here it is again so that you do not have to scroll up:

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://example.com/sitemap.xml

Replace it with the following code:

User-agent: *

Disallow:

Sitemap: https://Phamdom.blogspot.com/sitemap.xml

Sitemap: https://Phamdom.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Sitemap: https://Phamdom.blogspot.com/feeds/posts/default

Sitemap: https://Phamdom.blogspot.com/sitemap-pages.xml

Replace Phamdom.blogspot.com in the code with your blog's domain.

Add the following line once your blog has more than 500 posts:

Sitemap: https://Phamdom.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500

And so on: once the blog has more than 1000 posts, add another line as follows:

Sitemap: https://Phamdom.blogspot.com/atom.xml?redirect=false&start-index=1001&max-results=500

With these lines added, the complete custom robots.txt looks like this:

User-agent: *

Disallow:

Sitemap: https://Phamdom.blogspot.com/sitemap.xml

Sitemap: https://Phamdom.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Sitemap: https://Phamdom.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500

Sitemap: https://Phamdom.blogspot.com/atom.xml?redirect=false&start-index=1001&max-results=500

Sitemap: https://Phamdom.blogspot.com/feeds/posts/default

Sitemap: https://Phamdom.blogspot.com/sitemap-pages.xml

Replace https://Phamdom.blogspot.com with your own domain.
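If you would rather not write the Sitemap lines by hand, the pattern above (one atom.xml line per batch of 500 posts) can be generated. The following Python sketch is only an illustration: the function name sitemap_lines and the example domain are assumptions, and you need to supply your own post count.

```python
def sitemap_lines(blog_url: str, post_count: int, batch_size: int = 500) -> list[str]:
    """Build the Sitemap lines for a custom robots.txt.

    One atom.xml line is emitted for every `batch_size` posts, starting at
    index 1, 501, 1001, and so on.
    """
    base = blog_url.rstrip("/")
    lines = [f"Sitemap: {base}/sitemap.xml"]
    # Always emit at least the first batch, even for a blog with few posts.
    for start in range(1, max(post_count, 1) + 1, batch_size):
        lines.append(
            f"Sitemap: {base}/atom.xml?redirect=false"
            f"&start-index={start}&max-results={batch_size}"
        )
    lines.append(f"Sitemap: {base}/feeds/posts/default")
    lines.append(f"Sitemap: {base}/sitemap-pages.xml")
    return lines


if __name__ == "__main__":
    # Hypothetical blog with 1200 posts.
    print("User-agent: *")
    print("Disallow:")
    for line in sitemap_lines("https://example.blogspot.com", 1200):
        print(line)
```

For a blog with 1200 posts this produces three atom.xml lines, matching the hand-written example above.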

Then add the following noindex meta tag code to the <head> section of your blog template, so that archive, search, and label pages are not indexed and do not appear on Google search results pages.

<b:if cond='data:view.isArchive'>

<meta content='noindex,noarchive' name='robots'/>

</b:if>

<b:if cond='data:blog.searchQuery'>

<meta content='noindex,noarchive' name='robots'/>

</b:if>

<b:if cond='data:blog.searchLabel'>

<meta content='noindex,noarchive' name='robots'/>

</b:if>

Alternatively, you can use the newer conditional tag syntax like this:

<b:if cond='data:blog.pageType in {"archive"} or data:blog.searchLabel or data:blog.searchQuery'>

<meta content='noindex,nofollow,noarchive,nosnippet,noimageindex' name='robots'/>

</b:if>

Now save your new robots.txt in the Blogger settings. Then log in to Search Console, start validation for the "Indexed, though blocked by robots.txt" warning, and keep monitoring Search Console. The result is not immediate: the report usually appears within 3 days, and a notification is sent by email.

That concludes this article on how to solve the "Indexed, though blocked by robots.txt" problem on the Blogspot platform.
